WRF v3.6.1

ACM2-PX Sensitivity Test Description

- Code

- Domain

- Model

- Initialization

- Cases

- Verification

Codes Employed

The components of the end-to-end forecast system used in the ACM2-PX Sensitivity Test included:

• WRF Preprocessing System (WPS) (v3.6.1)

• Gridpoint Statistical Interpolation (GSI) (v3.3)

• WRF-ARW model (v3.6.1)

• Unified Post Processor (UPP) (v2.2)

• Model Evaluation Tools (MET) (v5.0)

• NCAR Command Language (NCL) for graphics generation

• Statistical programming language, R, to compute confidence intervals

Domain Configuration

• Contiguous U.S. (CONUS) domain with 15-km grid spacing


• 403 x 302 gridpoints, for a total of 121,706 horizontal gridpoints

• 56 vertical levels (57 sigma entries); model top at 10 hPa

• Lambert-Conformal map projection
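The gridpoint total quoted above follows directly from the grid dimensions. The short Python sketch below reproduces that arithmetic and estimates the domain extent; it assumes the extent is simply the number of grid intervals times the 15-km spacing, which ignores map-projection distortion and any grid staggering.

```python
# Values quoted in the domain configuration above.
NX, NY = 403, 302      # horizontal gridpoints in each direction
DX_KM = 15.0           # grid spacing in km


def horizontal_points(nx: int, ny: int) -> int:
    """Total number of horizontal gridpoints."""
    return nx * ny


def extent_km(n: int, dx_km: float) -> float:
    """Distance between the first and last gridpoint along one axis.

    Assumption: uniform spacing on the projected grid, n - 1 intervals.
    """
    return (n - 1) * dx_km


print(horizontal_points(NX, NY))                    # 121706
print(extent_km(NX, DX_KM), extent_km(NY, DX_KM))   # 6030.0 4515.0
```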

Sample Namelists

• namelist.wps

• namelist_AFWAOC.input

• namelist_ACM2.input

AFWA Reference Configuration

| Microphysics: | WRF Single-Moment 5 Scheme (opt 4) |

| Radiation: | RRTMG (opt 4) |

| Surface Layer: | Monin-Obukhov Similarity Theory (opt 91) |

| Land Surface: | Noah (opt 2) |

| PBL: | Yonsei University Scheme (opt 1) |

| Convection: | Kain-Fritsch Scheme (opt 1) |

• Sample namelist.input

ACM2-PX Replacement Configuration

| Microphysics: | WRF Single-Moment 5 Scheme (opt 4) |

| Radiation: | RRTMG (opt 4) |

| Surface Layer: | Pleim Xiu (opt 7) |

| Land Surface: | Pleim Xiu (opt 7) |

| PBL: | Asymmetric Convective Model 2 (opt 7) |

| Convection: | Kain-Fritsch Scheme (opt 1) |

• Sample namelist.input
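The two configurations differ only in the surface layer, land surface and PBL options. The Python sketch below records both option sets under the standard WRF `&physics` namelist variable names and lists the differences. It assumes that "RRTMG (opt 4)" applies to both the longwave and shortwave radiation options; the rendered block is illustrative only and is not a copy of the sample namelists linked above.

```python
# Option numbers are taken from the two tables above.
# Assumption: RRTMG (opt 4) is used for both longwave and shortwave.
AFWA = {
    "mp_physics": 4,           # WRF Single-Moment 5
    "ra_lw_physics": 4,        # RRTMG longwave (assumed)
    "ra_sw_physics": 4,        # RRTMG shortwave (assumed)
    "sf_sfclay_physics": 91,   # Monin-Obukhov similarity theory
    "sf_surface_physics": 2,   # Noah
    "bl_pbl_physics": 1,       # Yonsei University
    "cu_physics": 1,           # Kain-Fritsch
}

# The replacement configuration swaps three options to the Pleim-Xiu / ACM2 suite.
ACM2_PX = dict(AFWA, sf_sfclay_physics=7, sf_surface_physics=7, bl_pbl_physics=7)


def changed_options(ref: dict, test: dict) -> dict:
    """Map each differing option name to (reference value, test value)."""
    return {k: (ref[k], test[k]) for k in ref if ref[k] != test[k]}


def physics_block(cfg: dict) -> str:
    """Render the options as an illustrative &physics namelist block."""
    lines = ["&physics"]
    lines += [f" {name:<20}= {value}," for name, value in cfg.items()]
    lines.append("/")
    return "\n".join(lines)


print(changed_options(AFWA, ACM2_PX))
print(physics_block(ACM2_PX))
```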

Other run-time settings

• Long timestep = 90 s; Acoustic step = 4

• Calls to the boundary layer and microphysics parameterization were made every time step

• Calls to the cumulus parameterization were made every 5 minutes

• Calls to radiation were made every 30 minutes
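To put these intervals in terms of model steps, the Python sketch below converts each one into a number of 90-s long time steps. It assumes "Acoustic step = 4" means four acoustic substeps per long time step and that each forecast runs for 48 hours; both are interpretations, not statements from the namelists.

```python
TIME_STEP_S = 90            # long time step
ACOUSTIC_SUBSTEPS = 4       # assumed: acoustic substeps per long step
FORECAST_HOURS = 48         # assumed forecast length per case
CUMULUS_INTERVAL_MIN = 5
RADIATION_INTERVAL_MIN = 30


def long_steps(interval_s: float, dt_s: float = TIME_STEP_S) -> float:
    """Number of long time steps that fit in the given interval."""
    return interval_s / dt_s


total_steps = long_steps(FORECAST_HOURS * 3600)
acoustic_dt = TIME_STEP_S / ACOUSTIC_SUBSTEPS
steps_per_radiation = long_steps(RADIATION_INTERVAL_MIN * 60)
steps_per_cumulus = long_steps(CUMULUS_INTERVAL_MIN * 60)

print(total_steps)           # 1920.0
print(acoustic_dt)           # 22.5
print(steps_per_radiation)   # 20.0
print(steps_per_cumulus)     # not a whole number of long steps
```

The 5-minute cumulus interval is not a whole multiple of the 90-s step, so the effective call cadence depends on how the model rounds the interval, which is not described here.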

Initial and Boundary Conditions

• Initial conditions (ICs) and Lateral Boundary Conditions (LBCs): 0.5 x 0.5 degree Global Forecast System (GFS) model

• Lower Boundary Conditions (LoBCs): Output from the AFWA Land Information System (LIS) utilizing the Noah land surface model v2.7.1

• SST Initialization: Fleet Numerical Meteorology and Oceanography Center (FNMOC) daily, real-time sea surface temperature (SST) product

Preprocessing Component

The time-invariant component of the lower boundary conditions (topography, soil type, vegetation type, etc.) was generated from United States Geological Survey (USGS) input data by the geogrid program of WPS. The avg_tsfc program of WPS was also used to compute the mean surface air temperature, which provides an improved water temperature initialization for lakes and other small bodies of water in the domain that are farther from an ocean.

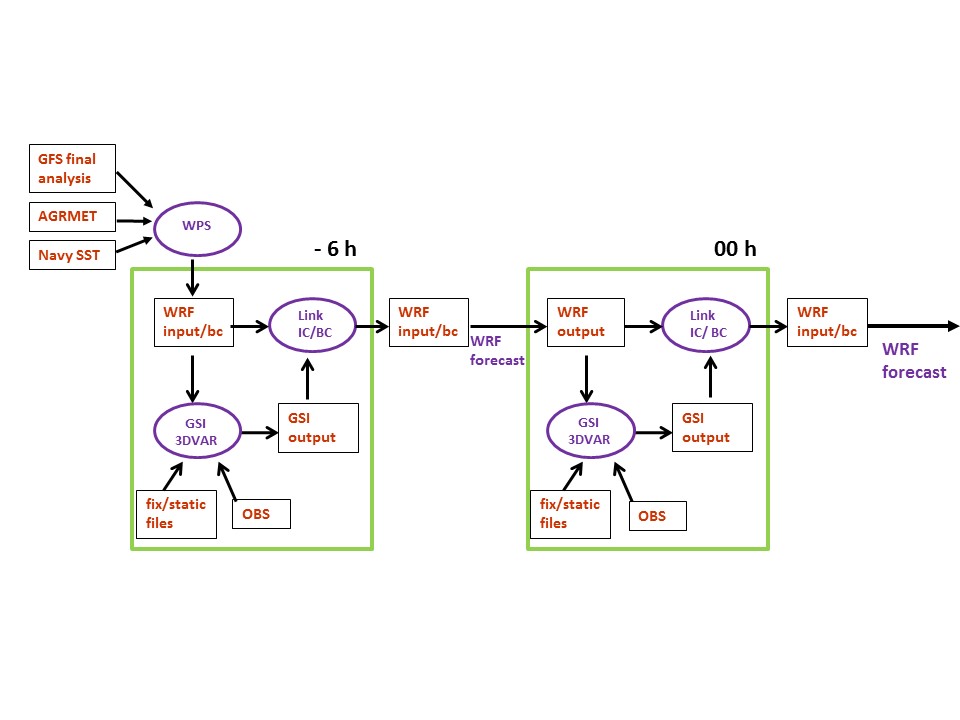

Data Assimilation Component

A 6-hour "warm start" spin-up procedure preceded each forecast (see figure below). Data assimilation was conducted using GSI at the beginning and at the end of the 6-hour warm start window, using observation data files provided by AFWA. At the beginning of the window, the GFS-derived initial conditions were used as the model background; at the end of the window, the 6-hour WRF forecast initialized by the first GSI analysis was used. After each GSI run, the LBCs derived from GFS were updated and used in the subsequent forecast.
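The sequence of steps for a single case can be laid out as a simple timeline. The Python sketch below is only a schematic of the procedure described above; the function name and step labels are illustrative, and it does not call WPS, GSI or WRF.

```python
from datetime import datetime, timedelta


def warm_start_timeline(init, spinup_hours=6, forecast_hours=48):
    """Return (valid time, step) pairs for one forecast case.

    `init` is the nominal forecast initialization time; the warm start
    window opens `spinup_hours` earlier.
    """
    spinup_start = init - timedelta(hours=spinup_hours)
    return [
        (spinup_start, "GSI analysis (background: GFS-derived ICs), then LBC update"),
        (spinup_start, "start 6-hour WRF spin-up forecast"),
        (init, "GSI analysis (background: 6-hour WRF forecast), then LBC update"),
        (init, "start 48-hour WRF forecast"),
        (init + timedelta(hours=forecast_hours), "end of forecast"),
    ]


for valid, step in warm_start_timeline(datetime(2013, 8, 3, 0)):
    print(valid.strftime("%Y-%m-%d %H UTC"), "|", step)
```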


Cases Run

• Forecast Dates: 1 August 2013 - 31 August 2014

• Initializations: Every 36 hours, including both 00 and 12 UTC

• Forecast Length: 48 hours; output files generated every 3 hours

| 00 UTC Initializations |

| August 2013: 3, 6, 9, 12, 15, 18, 21, 24, 27, 30 |

| September 2013: 2, 5, 8, 11, 14, 17, 20, 23, 26, 29 |

| October 2013: 2, 5, 8, 11, 14, 17, 20, 23, 26, 29 |

| November 2013: 1, 4, 7, 10, 13, 16, 19, 22, 25, 28 |

| December 2013: 1, 4, 7, 10, 13, 16, 19, 22, 25, 28, 31 |

| January 2014: 3, 6, 9, 12, 15, 18, 21, 24, 27, 30 |

| February 2014: 2, 5, 8, 11, 14, 17, 20, 23, 26 |

| March 2014: 1, 4, 7, 10, 13, 16, 19, 22, 25, 28, 31 |

| April 2014: 3, 6, 9, 12, 15, 18, 21, 24, 27, 30 |

| May 2014: 3, 6, 9, 12, 15, 18, 21, 24, 27, 30 |

| June 2014: 2, 5, 8, 11, 14, 17, 20, 23, 26, 29 |

| July 2014: 2, 5, 8, 11, 14, 17, 20, 23, 26, 29 |

| August 2014: 1, 4, 7, 10, 13, 16, 19, 22, 25, 28, 31 |

| 12 UTC Initializations |

| August 2013: 1, 4, 7, 10, 13, 16, 19, 22, 25, 28, 31 |

| September 2013: 3, 6, 9, 12, 15, 18, 21, 24, 27, 30 |

| October 2013: 3, 6, 9, 12, 15, 18, 21, 24, 27, 30 |

| November 2013: 2, 5, 8, 11, 14, 17, 20, 23, 26, 29 |

| December 2013: 2, 5, 8, 11, 14, 17, 20, 23, 26, 29 |

| January 2014: 1, 4, 7, 10, 13, 16, 19, 22, 25, 28, 31 |

| February 2014: 3, 6, 9, 12, 15, 18, 21, 24, 27 |

| March 2014: 2, 5, 8, 11, 14, 17, 20, 23, 26, 29 |

| April 2014: 1, 4, 7, 10, 13, 16, 19, 22, 25, 28 |

| May 2014: 1, 4, 7, 10, 13, 16, 19, 22, 25, 28, 31 |

| June 2014: 3, 6, 9, 12, 15, 18, 21, 24, 27, 30 |

| July 2014: 3, 6, 9, 12, 15, 18, 21, 24, 27, 30 |

| August 2014: 2, 5, 8, 11, 14, 17, 20, 23, 26, 29 |
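The two tables above are what a fixed 36-hour cadence produces when it starts at 12 UTC on 1 August 2013 and ends at 00 UTC on 31 August 2014. The Python sketch below regenerates the list of initialization times in the ten-digit YYYYMMDDHH form; the start and end times are read off the tables, and the function name is illustrative.

```python
from datetime import datetime, timedelta


def initializations(first, last, step_hours=36):
    """All initialization times from `first` to `last` inclusive."""
    times = []
    t = first
    while t <= last:
        times.append(t)
        t += timedelta(hours=step_hours)
    return times


inits = initializations(datetime(2013, 8, 1, 12), datetime(2014, 8, 31, 0))
case_ids = [t.strftime("%Y%m%d%H") for t in inits]

print(len(case_ids))                 # 264
print(case_ids[0], case_ids[-1])     # 2013080112 2014083100
print(sum(t.hour == 0 for t in inits), sum(t.hour == 12 for t in inits))  # 132 132
```

Because 36 hours is one and a half days, consecutive initializations alternate between 00 and 12 UTC, and each cycle hour recurs every three days.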

The table below lists the forecast initializations that failed to complete the end-to-end process, along with the reason for each failure. All incomplete forecasts were due to missing or bad input data sets, not model crashes.

Missing Forecasts:

| Affected Case | Missing Data | Reason |

| 2013080112 | WRF Output | Missing SST input data |

| 2013080900 | WRF Output | Missing GFS data |

| 2013081912 | WRF Output | Missing SST input data |

| 2013082100 | WRF Output | Missing GFS data |

| 2013091100 | WRF Output | Missing input observation data |

| 2013120400 | WRF Output | Bad SST input data |

| 2013122612 | WRF Output | Missing input observation data |

| 2013122800 | WRF Output | Missing SST, LIS, and input observation data |

| 2013122912 | WRF Output | Missing SST, LIS, and input observation data |

| 2013123100 | WRF Output | Missing SST, LIS, and input observation data |

| 2014010112 | WRF Output | Missing SST input data |

| 2014010900 | WRF Output | Missing SST and LIS input data |

| 2014011012 | WRF Output | Missing SST and LIS input data |

| 2014012212 | WRF Output | Bad SST input data |

| 2014020200 | WRF Output | Missing GFS data |

| 2014020312 | WRF Output | Missing GFS data |

| 2014030400 | WRF Output | Bad SST and missing LIS input data |

| 2014031412 | WRF Output | Missing LIS input data |

| 2014031712 | WRF Output | Bad SST input data |

| 2014041800 | WRF Output | Bad SST input data |

| 2014061812 | WRF Output | Missing SST, LIS, and input observation data |

| 2014062000 | WRF Output | Missing SST, LIS, and input observation data |

| 2014062112 | WRF Output | Missing SST, LIS, and input observation data |

| 2014062300 | WRF Output | Missing SST, LIS, and input observation data |

| 2014062412 | WRF Output | Missing SST, LIS, and input observation data |

| 2014062600 | WRF Output | Missing SST, LIS, and input observation data |

| 2014062712 | WRF Output | Missing SST, LIS, and input observation data |

| 2014062900 | WRF Output | Missing SST, LIS, and input observation data |

| 2014063012 | WRF Output | Missing SST, LIS, and input observation data |

| 2014070200 | WRF Output | Missing SST, LIS, and input observation data |

| 2014070312 | WRF Output | Missing SST, LIS, and input observation data |

| 2014070500 | WRF Output | Missing SST, LIS, and input observation data |

| 2014070612 | WRF Output | Missing SST, LIS, and input observation data |

| 2014070800 | WRF Output | Missing SST, LIS, and input observation data |

| 2014070912 | WRF Output | Missing SST, LIS, and input observation data |

| 2014071100 | WRF Output | Missing SST, LIS, and input observation data |

| 2014071212 | WRF Output | Missing SST, LIS, and input observation data |

| 2014071400 | WRF Output | Missing SST, LIS, and input observation data |

| 2014071512 | WRF Output | Missing SST, LIS, and input observation data |

Verification

The Model Evaluation Tools (MET) package, comprising:

• grid-to-point comparisons - utilized for surface and upper air model data

• grid-to-grid comparisons - utilized for QPF

was used to generate objective verification statistics, including:

• Bias-corrected Root Mean Square Error (BCRMSE) and Mean Error (Bias) for:

  • Surface: Temperature (2 m), Dew Point Temperature (2 m) and Winds (10 m)

  • Upper Air: Temperature, Dew Point Temperature and Winds

• Gilbert Skill Score (GSS) and Frequency Bias (FBias) for:

  • 3-hr and 24-hr Precipitation Accumulation intervals
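For reference, the four statistics named above can be written out in a few lines. The Python sketch below uses the standard textbook definitions (error defined as forecast minus observation, and a 2 x 2 contingency table built with a "greater than or equal to threshold" test); it is not MET code, and MET's handling of matching, masking and edge cases may differ.

```python
import math


def mean_error(fcst, obs):
    """Mean Error (Bias): average of forecast minus observation."""
    errors = [f - o for f, o in zip(fcst, obs)]
    return sum(errors) / len(errors)


def bcrmse(fcst, obs):
    """Bias-corrected RMSE: RMSE of the errors after removing the mean error."""
    errors = [f - o for f, o in zip(fcst, obs)]
    me = sum(errors) / len(errors)
    return math.sqrt(sum((e - me) ** 2 for e in errors) / len(errors))


def contingency(fcst, obs, threshold):
    """Counts of (hits, false alarms, misses, correct negatives)."""
    hits = false_alarms = misses = correct_neg = 0
    for f, o in zip(fcst, obs):
        f_yes, o_yes = f >= threshold, o >= threshold
        if f_yes and o_yes:
            hits += 1
        elif f_yes:
            false_alarms += 1
        elif o_yes:
            misses += 1
        else:
            correct_neg += 1
    return hits, false_alarms, misses, correct_neg


def gss(hits, false_alarms, misses, correct_neg):
    """Gilbert Skill Score (equitable threat score)."""
    total = hits + false_alarms + misses + correct_neg
    hits_random = (hits + false_alarms) * (hits + misses) / total
    denom = hits + false_alarms + misses - hits_random
    return (hits - hits_random) / denom if denom else float("nan")


def fbias(hits, false_alarms, misses):
    """Frequency Bias: forecast event count over observed event count."""
    observed = hits + misses
    return (hits + false_alarms) / observed if observed else float("nan")
```

A GSS of 0 indicates no skill beyond random chance and 1 is a perfect score; an FBias above 1 means the event was forecast more often than it was observed.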

Each type of verification metric is accompanied by confidence intervals (CIs), at the 99% level, computed using a parametric method for the surface and upper air variables and a bootstrapping method for precipitation.

Both configurations were run for the same cases, allowing a pair-wise difference methodology to be applied, as appropriate. The CIs on the pair-wise differences between statistics for the two configurations objectively determine whether the differences are statistically significant (SS).
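The idea behind the SS test can be sketched as follows: compute a 99% CI on the mean of the pair-wise differences and call the difference SS when the interval excludes zero. The Python sketch below is a simplified illustration, not the R code used for the test. It assumes a normal approximation with independent cases for the parametric interval and a plain percentile bootstrap of the mean difference; the actual procedure may account for temporal correlation and may resample precipitation statistics differently.

```python
import math
import random
from statistics import NormalDist, mean, stdev


def parametric_ci(diffs, level=0.99):
    """Normal-approximation CI on the mean pair-wise difference."""
    z = NormalDist().inv_cdf(0.5 + level / 2)
    centre = mean(diffs)
    half_width = z * stdev(diffs) / math.sqrt(len(diffs))
    return centre - half_width, centre + half_width


def bootstrap_ci(diffs, level=0.99, n_resamples=2000, seed=0):
    """Percentile bootstrap CI on the mean pair-wise difference."""
    rng = random.Random(seed)
    means = sorted(
        mean(rng.choices(diffs, k=len(diffs))) for _ in range(n_resamples)
    )
    lo_idx = int((1 - level) / 2 * n_resamples)
    hi_idx = n_resamples - 1 - lo_idx
    return means[lo_idx], means[hi_idx]


def is_statistically_significant(ci):
    """A difference is SS when the CI does not include zero."""
    low, high = ci
    return low > 0 or high < 0


# Hypothetical pair-wise differences (configuration A minus configuration B).
example = [0.5, 0.6, 0.4, 0.55, 0.45] * 4
print(is_statistically_significant(parametric_ci(example)))
print(is_statistically_significant(bootstrap_ci(example)))
```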

To establish practical significance (PS), and so isolate the SS pair-wise differences that are potentially more meaningful, the data were censored to retain only differences greater than the operational measurement uncertainty requirements defined by the World Meteorological Organization (WMO). The following criteria were applied to determine PS pair-wise differences between the configurations for select variables: i) temperature and dew point temperature differences greater than 0.1 K and ii) wind speed differences greater than 0.5 m s⁻¹.
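The PS criteria amount to a second filter applied on top of the SS test. The Python sketch below is a minimal illustration of that logic; the variable keys and function name are illustrative, and it assumes the thresholds are compared against the magnitude of the pair-wise difference.

```python
# Thresholds from the criteria above (K for temperatures, m/s for wind speed).
PS_THRESHOLDS = {
    "temperature": 0.1,
    "dew_point": 0.1,
    "wind_speed": 0.5,
}


def is_practically_significant(variable, diff, ci_low, ci_high):
    """True when a difference is SS and its magnitude exceeds the threshold.

    Assumption: SS means the CI on the pair-wise difference excludes zero.
    """
    statistically_significant = ci_low > 0 or ci_high < 0
    return statistically_significant and abs(diff) > PS_THRESHOLDS[variable]


print(is_practically_significant("temperature", 0.25, 0.10, 0.40))   # True
print(is_practically_significant("temperature", 0.05, 0.01, 0.09))   # False: SS but below 0.1 K
print(is_practically_significant("wind_speed", 0.70, -0.10, 1.50))   # False: not SS
```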

Area-averaged verification results were computed for the full and CONUS domains, East and West domains, and 14 sub-domains.